twitter.com/mogwai_poet/status/1060286856493813760

- “A robot arm with a purposely disabled gripper found a way to hit the box in a way that would force the gripper open”

OMG Skynet is born

- “Agent kills itself at the end of level 1 to avoid losing in level 2”

No it’s fine, we’re safe

People complain that computers don’t do what they are told. The truth is the opposite: they do exactly what they are told. The real problem is that we humans set the environment, parameters and questions badly.

This is why a fire-and-forget SOAR approach isn’t always best. Consider adding interactive steps:

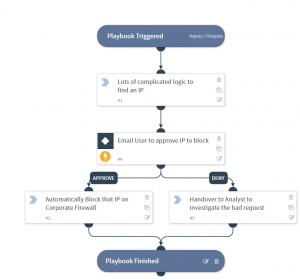

- SOC/CIRT analyst guiding the playbook via the WebUI

- Comms over email/Slack/SMS/other

- Interaction with non-SOC/CIRT users, e.g. letting a business owner control the playbook flow

Look at the following two approaches, and decide which is safer.

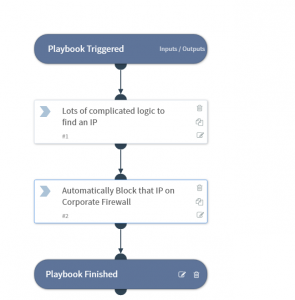

Option 1 – Automatically find the alert, auto extraction, auto enrich, auto decision making, auto block

Option 2 – Automatically find the alert, auto extraction, auto enrich, auto decision making, but ask a user (email, Slack, SMS) to validate before blocking
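
To make the difference concrete, here is a minimal Python sketch of the two options. It is not tied to any SOAR product: `Alert`, `decide`, `run_playbook`, the `score` field and the threshold of 80 are all hypothetical names and values made up for illustration. The `approve` callback stands in for whatever channel you would actually use to ask a human (email, Slack, SMS), and `block` stands in for the real blocking action.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Alert:
    alert_id: str
    indicator: str  # e.g. an IP address extracted from the alert
    score: int      # hypothetical enrichment score, 0-100


@dataclass
class PlaybookResult:
    alert_id: str
    blocked: bool = False
    log: List[str] = field(default_factory=list)


def decide(alert: Alert, threshold: int = 80) -> bool:
    """Automated decision step: treat high-scoring alerts as malicious."""
    return alert.score >= threshold


def run_playbook(
    alert: Alert,
    block: Callable[[str], None],
    approve: Optional[Callable[[Alert], bool]] = None,
) -> PlaybookResult:
    """Run the playbook for one alert.

    approve=None     -> Option 1: fire and forget, block automatically.
    approve=callable -> Option 2: ask a human before blocking.
    """
    result = PlaybookResult(alert_id=alert.alert_id)

    if not decide(alert):
        result.log.append("decision: benign, no action")
        return result
    result.log.append("decision: malicious")

    # Interactive step: only present in Option 2.
    if approve is not None:
        if not approve(alert):
            result.log.append("approval: rejected, block skipped")
            return result
        result.log.append("approval: granted")

    block(alert.indicator)
    result.blocked = True
    result.log.append(f"blocked {alert.indicator}")
    return result


if __name__ == "__main__":
    alert = Alert("A-1", "203.0.113.7", score=95)

    # Option 1: the automated decision alone triggers the block.
    blocked_auto: List[str] = []
    print(run_playbook(alert, blocked_auto.append).log)

    # Option 2: a (simulated) business owner says no, so nothing is blocked.
    blocked_checked: List[str] = []
    print(run_playbook(alert, blocked_checked.append, approve=lambda a: False).log)
```

In Option 1 a badly set threshold or a bad enrichment source goes straight to a block. In Option 2 the same mistake only produces a question to a human, who can say no.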

Andy